EmBARDiment: an Embodied AI Agent for Productivity in XR

Riccardo Bovo, Steven Abreu, Karan Ahuja, Eric J Gonzalez, Li-Te Cheng, Mar Gonzalez-Franco

Abstract

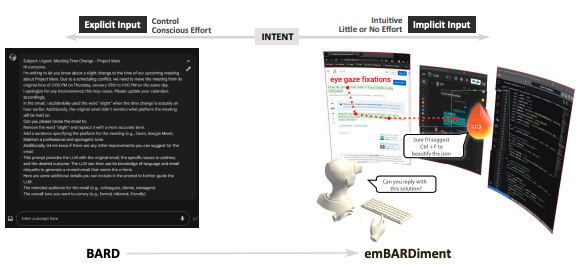

XR devices running chat-bots powered by Large Language Models (LLMs) have the potential to become always-on agents that enable much better productivity scenarios. Current screen-based chat-bots do not take advantage of the full suite of natural inputs available in XR, including inward-facing sensor data; instead, they over-rely on explicit voice or text prompts, sometimes paired with multi-modal data dropped as part of the query. We propose a solution that leverages an attention framework that derives context implicitly from user actions, eye-gaze, and contextual memory within the XR environment. Our work minimizes the need for engineered explicit prompts, fostering grounded and intuitive interactions that glean user insights for the chat-bot.

Citation

Bovo, R., Abreu, S., Ahuja, K., Gonzalez, E. J., Cheng, L.-T., & Gonzalez-Franco, M. (2025, March). EmBARDiment: An embodied AI agent for productivity in XR. In 2025 IEEE Conference Virtual Reality and 3D User Interfaces (VR) (pp. 708-717). IEEE.

BibTeX

@inproceedings{bovo2025embardiment,

title={{EmBARDiment}: an Embodied {AI} Agent for Productivity in {XR}},

author={Bovo, Riccardo and Abreu, Steven and Ahuja, Karan and Gonzalez, Eric J and Cheng, Li-Te and Gonzalez-Franco, Mar},

booktitle={2025 IEEE Conference Virtual Reality and 3D User Interfaces (VR)},

pages={708--717},

year={2025},

organization={IEEE}

}